I'm following along with day 018 (Enforcing A Video Frame Rate) on Linux. I'm using nanosleep, and with the default scheduler policy SCHED_OTHER and setpriority(PRIO_PROCESS, getpid(), -20) it does not reliably wake up at the requested time. I considered switching the scheduler policy to SCHED_FIFO with priority sched_get_priority_max(SCHED_FIFO), relying on nanosleep to yield the CPU to other tasks. But with other processes such as Xorg running, I thought this might cause issues for the end user. So the only way I can see is to spin in a while(1) loop and just 'melt' the CPU. Does anyone have any suggestions or experience with this on Linux? Thanks.

One crazy idea I explored a while ago was to predict the noise on the system by sampling recent history and make an educated guess as to how much to sleep for. I was able to cancel system noise quite well this way but I think my code would go off the rails in other cases so I'm neither using it nor going to share it. If someone does a provably robust version of that idea I would love to see it.

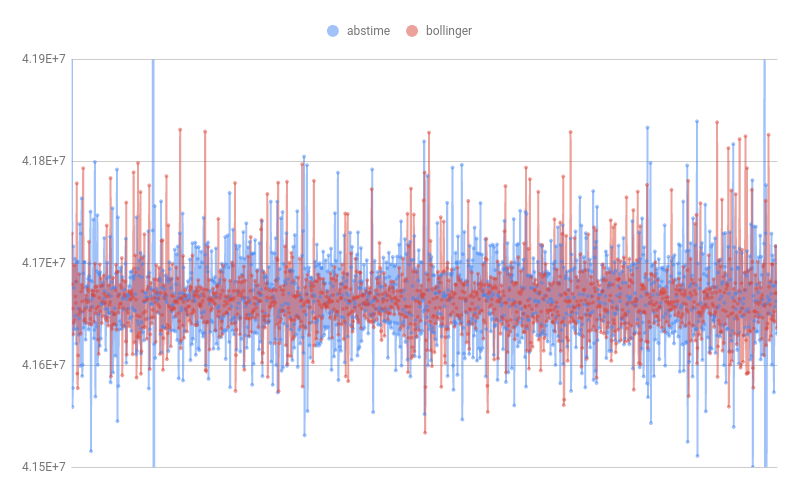

05:49 <neo> this is a minute of timing data for abstime and a minute of timing data for my new version, note that the chart cuts off some of the abstime datapoints because the outliers for it are large

05:50 <neo> abstime min-max 4.0x10^7 to 4.3x10^7

05:50 <neo> my ver min-max 4.15x10^7 to 4.18x10^7

05:51 <neo> abstime stdev: 73518.51835

05:51 <neo> my ver stdev: 35637.30126

To more helpfully answer your question, here is a relevant chat log:

17:54 <Discord> <Croepha (Dave from SF)> Hey, anyone have insights on why epoll_wait uses the CLOCK_MONOTONIC clock instead of the CLOCK_MONOTONIC_RAW clock? it seems like if you are holding off some action based on some delay (like a retry or a throttle) you wouldn't want that to be affected by NTP or clock drift... I don't have much experience with adjtime, it may be that it is always only a trivial amount of drift...

04:40 <Discord> <Croepha (Dave from SF)> so, looking at this: https://github.com/ntp-project/ntp/blob/9c75327c3796ff59ac648478cd4da8b205bceb77/ntpdate/ntpdate.h#L64 it looks like adjtime is only used for sub-second adjustments, so, using CLOCK_MONOTONIC seems fine then

05:16 <neo> @Croepha what clock would you recommend for frame capping an emulator? I went with using clock_nanosleep with CLOCK_MONOTONIC and TIMER_ABSTIME but I haven't thought deeply about it, I just want to consistently present frames at a fixed interval without frame drift etc

05:16 <neo> https://gitlab.com/-/snippets/2135217#LC1057

16:02 <Discord> <Peter Fors> @neo I did a little test to see stability of nanosleep, there were some fluctuations when waiting the entire time with nanosleep, so I sleep the last ms with a poll-loop with clock_gettime https://pastebin.com/c0fcb8Ry if you are helped with that

16:04 <Discord> <Peter Fors> On my machine the furthest from 10ms is +20us or so over 10 minutes

06:53 <Discord> <Croepha (Dave from SF)> @neo sorry, wasn't ignoring you but the @ wasn't pinging me... probably has something to do with discord seeing you as a bot or something.... but I think I would use clock_nanosleep as you were doing, I think that is the best you can get in linux, as far as I can tell
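For readers who have not used it, the approach neo describes (clock_nanosleep with CLOCK_MONOTONIC and TIMER_ABSTIME) might look roughly like the sketch below. This is not the code from neo's snippet; the helper names `timespec_add_ns`, `sleep_until` and `run_frames` are made up for illustration. The point of the absolute deadline is that each frame's wake-up time is computed from the previous deadline rather than from "now", so a late wake-up on one frame does not push every later frame back.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <stdint.h>
#include <time.h>

#define NSEC_PER_SEC 1000000000L

/* Advance an absolute timestamp by `ns` nanoseconds (ns assumed >= 0),
 * keeping tv_nsec inside [0, 1e9). */
static void timespec_add_ns(struct timespec *t, int64_t ns)
{
    t->tv_sec  += (time_t)(ns / NSEC_PER_SEC);
    t->tv_nsec += (long)(ns % NSEC_PER_SEC);
    if (t->tv_nsec >= NSEC_PER_SEC) {
        t->tv_nsec -= NSEC_PER_SEC;
        t->tv_sec  += 1;
    }
}

/* Sleep until the absolute CLOCK_MONOTONIC time `deadline`.
 * clock_nanosleep returns the error number directly (it does not set errno),
 * so retry while a signal interrupted the sleep. */
static int sleep_until(const struct timespec *deadline)
{
    int rc;
    do {
        rc = clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, deadline, NULL);
    } while (rc == EINTR);
    return rc;
}

/* Hypothetical frame loop: paces `frame_count` frames `frame_ns` apart.
 * Returns 0 on success or an error number. */
static int run_frames(int frame_count, int64_t frame_ns)
{
    struct timespec deadline;
    if (clock_gettime(CLOCK_MONOTONIC, &deadline) != 0)
        return errno;

    for (int i = 0; i < frame_count; i++) {
        /* ... emulate and present one frame here ... */

        /* Next deadline is derived from the previous one, not from the
         * current time, so wake-up jitter does not accumulate as drift. */
        timespec_add_ns(&deadline, frame_ns);
        int rc = sleep_until(&deadline);
        if (rc != 0)
            return rc;
    }
    return 0;
}
```

For example, `run_frames(600, 16666667)` would pace roughly ten seconds of frames at about 60 Hz. Individual wake-ups can still be late by however much the scheduler delays them; the absolute deadline only prevents that lateness from accumulating.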

TL;DR: Peter's technique is to sleep for less than the time needed and then busy-loop to burn the remaining time, e.g. the last 1 ms.
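A minimal sketch of that sleep-then-spin idea, assuming CLOCK_MONOTONIC and a caller-chosen spin margin (1 ms in the thread). This is not Peter's pastebin code; `now_ns` and `wait_until_ns` are hypothetical names, and the right margin likely depends on the machine and its load.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <stdint.h>
#include <time.h>

/* Current CLOCK_MONOTONIC time in nanoseconds. */
static int64_t now_ns(void)
{
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (int64_t)ts.tv_sec * 1000000000LL + ts.tv_nsec;
}

/* Wait until the absolute time `target_ns` (same clock as now_ns()).
 * Sleeps until `spin_ns` before the target, then polls the clock for the
 * remainder, trading a little CPU for a tighter wake-up. */
static void wait_until_ns(int64_t target_ns, int64_t spin_ns)
{
    int64_t wake_ns = target_ns - spin_ns;

    if (wake_ns > now_ns()) {
        struct timespec wake = {
            .tv_sec  = (time_t)(wake_ns / 1000000000LL),
            .tv_nsec = (long)(wake_ns % 1000000000LL),
        };
        /* Coarse part: let the kernel wake us slightly early.
         * Retry if a signal interrupts the sleep. */
        while (clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &wake, NULL) == EINTR) {
        }
    }

    /* Fine part: busy-poll the clock until the target is reached. */
    while (now_ns() < target_ns) {
    }
}
```

A frame loop would call something like `wait_until_ns(next_frame_ns, 1000000)` and then advance `next_frame_ns` by the frame period. If the sleep itself overshoots by more than the margin, the spin loop exits immediately and the frame is still late, so the margin has to cover the worst sleep jitter you expect to see.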

I think it comes from the Windows implementation: there Casey used timeBeginPeriod, which changes the sleep granularity of the whole OS down to 1 millisecond. Then you can sleep until the last millisecond and busy-loop for the remaining millisecond or less.

In newer Windows 10 versions this changed so that timeBeginPeriod does not affect other processes as much. It is still not completely process-local, but it affects the system far less than it used to: https://randomascii.wordpress.com/2020/10/04/windows-timer-resolution-the-great-rule-change/