I am having problems with the queue wrapping. I watched Casey implement the queue a few times. He did attempt to test the wrapping by setting the array to store 10 elements and then pushed 10 strings before sleeping for 1 second. The program did not run correctly so he changed the array to 4096 and still had issues running the program. Timestamp: https://youtu.be/0jfDwujUY4Y?t=3082

Then he changed the ordering that queue->NextEntryToRead is retrieved, and then the program ran. But, I did not see Casey proceed to verify that wrapping actually was working. I tried it and it sometimes works, but sometimes it does not. I even took the threads out of the equation and just ran the Win32CompleteAllWork to have the main thread complete all the jobs. The logic appears to be busted as the NextEntryToRead will equal the NextEntryToWrite if you wrap around.

u32 newNextEntryToWrite = (queue->NextEntryToWrite + 1) % ArrayLength(queue->Entries);

You can see from the above output that the NextToWrite will wrap around to 0(and because this is using a single thread) the NextToRead will also be 0. It doesn't seem like wrapping would work even with multiple threads. So, am I safe to assume that we are just banking on not wrapping around by setting the entry size larger than what we will use and instead, just using a recyclable buffer for the Entries?

Update: After playing around with a bit more, I think the problem only exists if we happen to push enough elements on the queue such that it wraps around, before any thread is able to complete the work to update the next entry to read. This may explain why it was working sometimes and other times it would not...Because both NextRead and NextWrite are zero at the start, so if NextWrite wraps around while we are adding Enties before any work has been done, the logic fails because NextRead and NextWrite are never different.

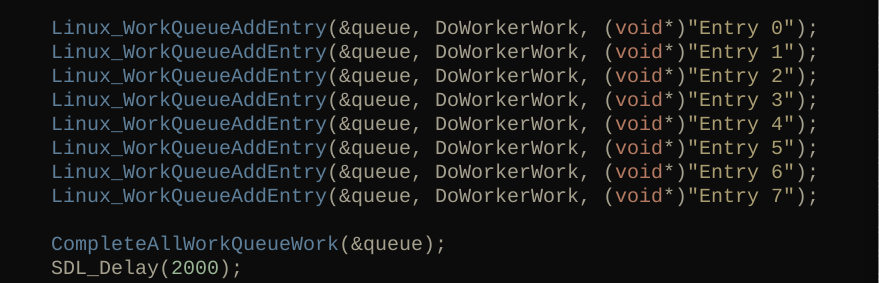

I used a for loop to add several strings(1 less element than the array length) to print as work. I called the CompleteAllWork function after adding entries and there did not seem to be any issue. This seems to imply that the queue wrapping is working. However, it does not work if we happen to add just enough work to wrap around before any work has been completed. We have to get the balls rolling so that the NextWrite is ahead of the NextRead.

Hard for me to describe concisely...So hopefully the following code example will demonstrate what I mean...and help anyone else having the same issue:

I didn't look precisely at your code but I believe it's the issue discussed here : Question on / possible problem with multithreading queue

There is a reply of Casey about that issue on github (copied below) https://github.com/HandmadeHero/cpp/issues/103

I feel like we've talked about this many times before, but I can re-look. I believe even at the time it was written, I said specifically that we're only handling our specific case. There's not a lot of reason for us to leave it in there, though, because it would be easy enough for us to just replace it with a linked list or something which cannot fail and also doesn't have length limits. If people are going to be using this for general multithreading then we should probably do that.

- Casey

Thank you for the links. I am not sure that was the issue that I was encountering. Then again I am still trying to wrap my head around multi-threading. :)

The problem I had was with adding enough elements to wrap around the entire buffer. Well, I think it was because if I added elements n-2 of the buffer size the problem went away... i.e.

int array[8]; array[0] = 1; . . . array[6] = 6; //NextToWrite is array[7]

However, adding n-1 elements would sometimes succeed and sometimes fail...

int array[8]; array[0] = 1; . . . array[7] = 6; //NextToWrite is array[0]

I think this would sometimes work because a thread would jump in there and start working on the added entry before we wrapped around. But, sometimes we would add enough entries to wrap back around to 0 before a thread would start working and no work would be completed because the following condition would not be true.

if(originalNextEntryToRead != queue->NextEntryToWrite)

Like I said, this problem went away once I added any amount of entries less than the buffers capacity. Such that: NextToWrite <= ArrayLength(array) - 1.

I even made a simple function to use the queue in the game loop and let it run for an hour. There was never a problem. Hardly a rigorous experiment, but I think the queue is working so long as a thread can get in there and start working on an entry before the NextToWrite wraps around to equal whatever the NextToRead is. Thinking about it now that was a question in the episode that someone asked I didn't, until now, associate that with my problem. But, locking the main thread to try and work on some of the entries or wait for all entries to be completed before continuing should prevent that problem from occurring. In addition to setting the entry array capacity to something like 256 should also prevent that problem from occurring. I mean why would we even have something like 256 entries anyway? We may have a combination of physical/virtual cores adding up to 4, 8, 16, or 32...lol

Thank you again for your response.

The assert in Linux_WorkQueueAddEntry isn't the same as in handmade hero. You're just asserting that we are in the bounds of the array (which is guaranteed by the modulo), while handmade hero asserts that NewNextEntryToWrite isn't equal to Queue->NextEntryToRead. Which means that HMH's code will trigger the assert if you try to add an entry while the queue is full.

internal void Win32AddEntry(platform_work_queue *Queue, platform_work_queue_callback *Callback, void *Data) { // TODO(casey): Switch to InterlockedCompareExchange eventually // so that any thread can add? uint32 NewNextEntryToWrite = (Queue->NextEntryToWrite + 1) % ArrayCount(Queue->Entries); Assert(NewNextEntryToWrite != Queue->NextEntryToRead); platform_work_queue_entry *Entry = Queue->Entries + Queue->NextEntryToWrite; Entry->Callback = Callback; Entry->Data = Data; ++Queue->CompletionGoal; _WriteBarrier(); _mm_sfence(); Queue->NextEntryToWrite = NewNextEntryToWrite; ReleaseSemaphore(Queue->SemaphoreHandle, 1, 0); }

I mean why would we even have something like 256 entries anyway? We may have a combination of physical/virtual cores adding up to 4, 8, 16, or 32...lol

Most of the time I use a queue that is a lot bigger than the number of CPU core/threads. I might have a thousand tasks that need to be accomplished and I don't want to manage when to add a task in the queue. You just queue them all and let the threads do their thing.

Well that Assert would have definitely caught my problem! My goodness...lol. Thank you.

I did think that we may want to queue up several tasks to divide among a bunch of threads. But, I suppose that would be interesting to queue up a lot of work and let the thread(s) do their stuff. Would you use some sort of bool, or todo/complete variables to test when the thread(s) are done with that batched work?

I want to clarify that I have very little experience with multi-threading.

Also just in case it wasn't clear, having a queue with a lot of entries doesn't mean that we use a lot of threads, we can have a queue of 1024 entries and 1 threads.

My current codebase uses a modified version of handmade hero's queue, so it uses completion goal and completion count.

But in my programs I'm mostly using threads to do asynchronous work (instead of getting more performance), like loading something from the internet without blocking the application if it takes longer than a frame. It doesn't really matter (I think) but that means that I generally don't care if all the tasks are completed, the higher level (the function that execute in the threads, and the code that requested the work) has some way to signal that the work has been done and the result can be processed. But I still have a function to wait for the queue's completion if I need it.

The comment from Casey in my other reply is also to consider. A queue with no limit on how much tasks you can queue is something that I should look into because at the moment if any of my application try to queue too much work, it will just silently fail.

That is cool. I have no experience with multi-threading, so at this stage thinking about something running in the background and the result becomes available at some undetermined(or interval) of time is currently beyond me. I am still in the single thread procedural school of thought. :)

I do understand a lot of what Casey is doing is specific to Handmade hero, but the exposure to new things here is just phenomenal. ...and the presentation is cool too. Too many resources out there start with a trivial example that is not really helpful to do anything useful and then they ramp it up to general purpose solutions that carry a lot of baggage.

Handmade Hero: Here is a potential solution to x, and we currently don't care about x + n.

This is so much easier to follow than the default being solutions to x + n.