All of the OSX platform layers I've seen use OpenGL which is kind of not the same as hand-rolling a software renderer from scratch, no?

Would Quartz2D be about as low as you can go on OSX?

If you mean the OS X platform layers posted in this forum, they use OpenGL only to push the bitmap buffer to the screen (similar to what StretchBlt does in the Windows platform layer). Preparing the contents of the bitmap buffer is still the game layer's responsibility. So these OS X platform layers are technically still doing software rendering from scratch and only use OpenGL to blit.
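
To make that split concrete, here is a minimal C sketch of the game-layer side: it fills a plain memory buffer pixel by pixel and never touches a graphics API. The `Backbuffer` struct and `render_gradient` function are hypothetical names for illustration, and the 0x00RRGGBB pixel packing is an assumption rather than something any of the linked platform layers necessarily uses.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical backbuffer description shared by the game and platform layers. */
typedef struct {
    uint32_t *pixels;  /* width * height packed 32-bit pixels, row by row */
    int width;
    int height;
} Backbuffer;

/* Game layer: computes every pixel itself; no OpenGL or Quartz involved. */
void render_gradient(Backbuffer *buffer, int x_offset, int y_offset)
{
    assert(buffer->pixels != NULL);
    for (int y = 0; y < buffer->height; ++y) {
        uint32_t *row = buffer->pixels + (size_t)y * (size_t)buffer->width;
        for (int x = 0; x < buffer->width; ++x) {
            /* Truncating to 8 bits makes the pattern wrap every 256 pixels. */
            uint8_t blue  = (uint8_t)(x + x_offset);
            uint8_t green = (uint8_t)(y + y_offset);
            /* Assumed packing: 0x00RRGGBB, with red left at zero. */
            row[x] = ((uint32_t)green << 8) | (uint32_t)blue;
        }
    }
}
```

The platform layer would call something like this once per frame and then hand `pixels` to whatever blit it uses, OpenGL or Quartz. Only that last step differs between the approaches discussed in this thread.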

But yeah, if you want to avoid OpenGL then you can use Quartz. Create a CGImage (with CGBitmapContextCreateImage) or an NSImage (with NSBitmapImageRep) from the bitmap data and draw that to the window. The only disadvantage is that blitting will probably be slower.

My implementation uses Quartz.

https://github.com/tarouszars/handmadehero_mac

I'm sure using Quartz instead of OpenGL is much slower, but it felt closer to Casey's implementation this way.

I haven't done much mac programming so I'm sure it is not optimal, but it works.

I'm pretty sure it'd be slower too. I think Casey knows this as well and specifically avoided using OpenGL himself in order to demonstrate some principles and ideas. I'd like to follow along as closely as I can.

I've done enough OpenGL programming to know what you can do with it (enough to have discovered and fixed a bug in the WebGL VAO extension spec while implementing it in Firefox at least).

Thanks for the link to your implementation. The Apple developer reference is pretty comprehensive, but I'm not terribly familiar with the APIs and, as Casey has mentioned many times, "you have to know what to look for."

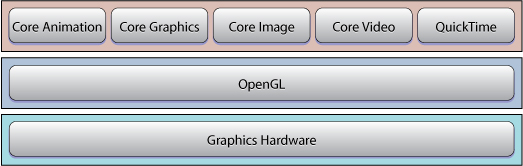

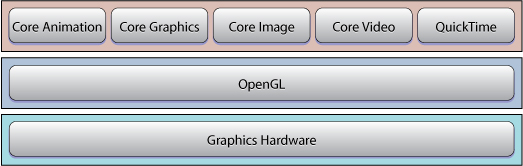

I don't think that by using Quartz/CoreGraphics you are really getting "closer to the metal" on OS X. Apple states that OpenGL "underlies other OS X graphics technologies". Here is a diagram from Apple docs:

On the other hand using CoreGraphics is certainly closer to Casey's implementation from the code structure perspective and it's a lot more understandable for guys who aren't already familiar with OpenGL.

Interesting. I'll have to dig in more. Are there any recommended guides or advice for digging into OS X graphics?

Here's the Quartz2D guide: https://developer.apple.com/libra...ml#//apple_ref/doc/uid/TP30001066

For the OS X platform layer that I did, I picked OpenGL because:

- We'll probably use OpenGL in the future for more optimized hardware-accelerated rendering.

- It's very easy to sync OpenGL frame buffer swaps with the display's vertical refresh rate.

- It wasn't much harder to use OpenGL than any other API.

OpenGL is actually the closest to the metal that you can currently get on OS X. It sits below Quartz. On iOS, you could drop down to Apple's Metal API, but that won't be coming to OS X any time soon.

Also, realize that the current platform layer is using OpenGL just as a dumb bit-blitter. It's not like we're getting a massive speedup by using OpenGL at the moment. You could use Quartz with a CGLayer and probably get similar performance. I tried this early in the project to compare performance and it was in the ballpark of the OpenGL version.

Here's an excerpt of blitting the early bit pattern using a CGLayer:

https://gist.github.com/itfrombit/909b6ee24f31a2746267

-Jeff

Jeff, can you explain why you implemented your example code snippet the way you did? It seems much more complicated than necessary to me. I would just go ahead and create a CGImageRef from the bitmap data (via CGImageCreate) and draw it via CGContextDrawImage. That is certainly less code, and I would guess also faster (but I didn't measure).

I'm sure resurrecting this old thread is poor forum etiquette, but it looks to be very relevant to my interests. Jeff (or anyone else who can help), I'm curious what the simplest version of your platform layer is i.e. what would the analogous OSX implementation be to what Casey had running under windows on say, day 5, where there was just one file and a couple hundred lines of code?

https://github.com/gamedevtech/CocoaOpenGLWindow is probably a good starting point. It's what I started my OS X platform layer with (for another project, but one very similar to Handmade Hero). You pretty much have to use Objective-C (or Objective-C++) for a minimum set of interactions with the platform, but from there calling into C/C++ is easy enough.

https://github.com/nxsy/hajonta/tree/master/source/hajonta/platform contains my platform layers for that project. It has three files for OS X: one for Objective-C++ (osx.mm), one for C++ (osx.cpp), and one header file for common things. It could probably be rewritten as a single Objective-C++ file.

Neil

itfrombit wrote:

OpenGL is actually the closest to the metal that you can currently get on OS X. It sits below Quartz. On iOS, you could drop down to Apple's Metal API, but that won't be coming to OS X any time soon.

Metal is now available on OS X El Capitan. However, El Capitan looks to be on just shy of 30% of OS X installs, so it hasn't been widely adopted yet.

Those links are incredibly helpful, thank you! :) I think I follow most of what's going on, but how exactly do you draw to the window once you've created it? Is there an equivalent of StretchDIBits in there? I'm not very familiar with Objective C, so I might not be following that part too well.

In OpenGL, creating a texture, using glTexSubImage2D() to update the texture data from the host, and then drawing it to the screen as a fullscreen quad is usually the best way to do that. There's also glDrawPixels, but that can sometimes be slow.

There might be a faster, platform-specific way to do this on OS X, but I don't know what it is. Using Metal will probably be even faster, but I haven't tried that. It would probably be similar to the glTexSubImage2D approach.

Without OpenGL you can use CGImage to draw to screen.

This is how you do it: http://www.conceptualinertia.net/aoakenfo/cgdataprovidercreatedirect

You need to allocate bitmapProvider and bitmap objects only once. Once you create them, you can change pixel bytes directly in your buffer.
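
The "allocate once, then write into the same buffer every frame" idea can be sketched in portable C without any CoreGraphics calls. Everything named here (`FrameStore`, `PresentFn`, `frame_store_run`, `record_present`) is hypothetical and not taken from the linked article. The `present` callback is only a stand-in for whatever actually draws the pixels, for example a CGImage draw or an OpenGL texture upload.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical pixel store that lives for the whole run of the program. */
typedef struct {
    uint32_t *pixels;
    int width;
    int height;
} FrameStore;

/* Stand-in for the platform blit; a real one would draw the pixels. */
typedef void (*PresentFn)(const uint32_t *pixels, int width, int height, void *user);

/* One-time setup: returns 0 on success, -1 if the allocation fails. */
int frame_store_init(FrameStore *store, int width, int height)
{
    store->pixels = calloc((size_t)width * (size_t)height, sizeof *store->pixels);
    store->width = width;
    store->height = height;
    return store->pixels ? 0 : -1;
}

/* Per-frame work: overwrite the same memory, then present it.
   Nothing is allocated or freed inside the loop. */
void frame_store_run(FrameStore *store, int frame_count, PresentFn present, void *user)
{
    assert(store->pixels != NULL);
    size_t pixel_count = (size_t)store->width * (size_t)store->height;
    for (int frame = 0; frame < frame_count; ++frame) {
        for (size_t i = 0; i < pixel_count; ++i) {
            store->pixels[i] = (uint32_t)frame;  /* placeholder "rendering" */
        }
        present(store->pixels, store->width, store->height, user);
    }
}

void frame_store_free(FrameStore *store)
{
    free(store->pixels);
    store->pixels = NULL;
}

/* Example callback that only records what it was handed. */
typedef struct {
    const uint32_t *last_pixels;
    int calls;
} PresentRecord;

void record_present(const uint32_t *pixels, int width, int height, void *user)
{
    (void)width;
    (void)height;
    PresentRecord *record = user;
    record->last_pixels = pixels;
    record->calls += 1;
}
```

The point of the sketch is that the pointer handed to `present` never changes between frames, which is what lets the image-side objects be created once and reused.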

But using OpenGL will most likely be faster. All modern OSes use some kind of 3D API like OpenGL to render to the screen, so any other direct "pixel access" API will create an OpenGL texture and draw it to the screen for you. You can do that yourself and save the time spent calling the legacy API.
